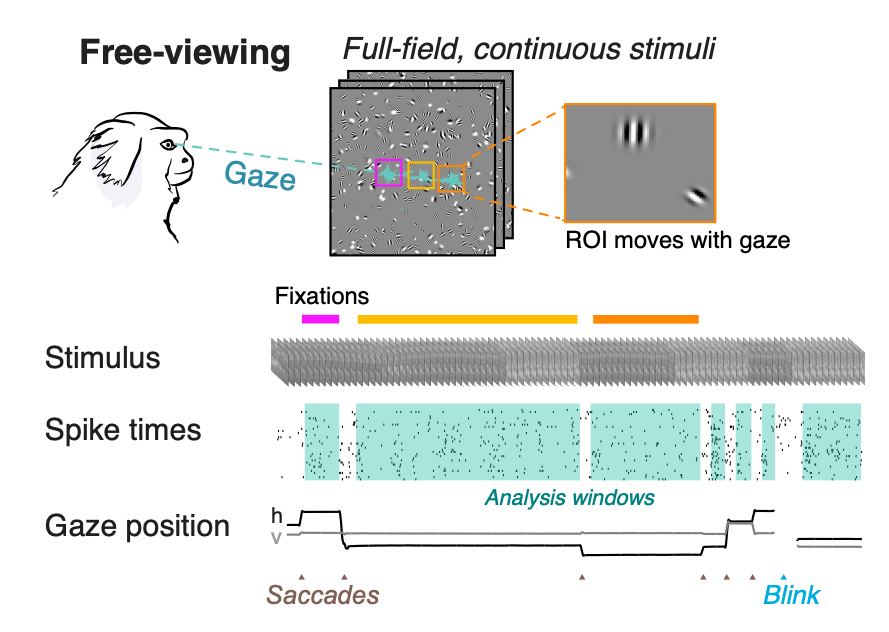

Hi, I'm Gabe Sarch

I'm a fifth-year Ph.D. student at Carnegie Mellon University in the Neural Computation and Machine Learning joint Ph.D. program, under the supervision of Dr. Katerina Fragkiadaki and Dr. Mike Tarr. My work is supported by the National Science Foundation Graduate Research Fellowship. I received a B.S. in Biomedical Engineering from the University of Rochester, where I studied the marmoset visual system under Dr. Jude Mitchell. I previously held research positions at Microsoft Research and Yutori AI.

Starting Fall 2025, I will join Princeton University's PLI as a Postdoctoral Fellow.

Research

Grounded Reinforcement Learning for Visual Reasoning

GH Sarch, S Saha, N Khandelwal, A Jain, MJ Tarr, A Kumar, K Fragkiadaki

Preprint

Grounding Task Assistance with Multimodal Cues from a Single Demonstration

GH Sarch, B Kumaravel, S Ravi, V Vineet, A Wilson

ACL 2025 Findings

Open-Ended Instructable Embodied Agents with Memory-Augmented Large Language Models

GH Sarch, Y Wu, MJ Tarr, K Fragkiadaki

EMNLP 2023 Findings

New in the ICLR 2024 Workshop on LLM Agents: HELPER-X achieves few-shot SoTA on four embodied AI benchmarks (ALFRED, TEACh, DialFRED, and the Tidy Task) using a single agent, with only simple modifications to the original HELPER.

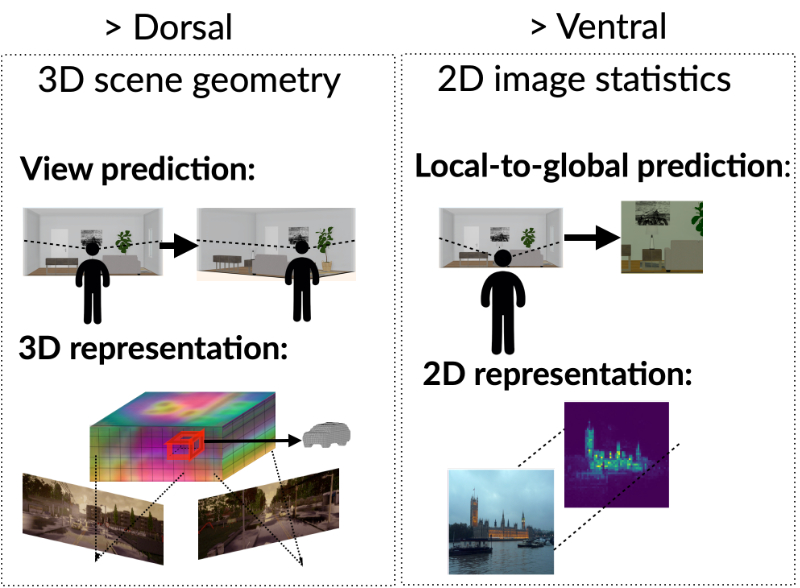

Brain Dissection: fMRI-trained Networks Reveal Spatial Selectivity in the Processing of Natural Images

GH Sarch, MJ Tarr, K Fragkiadaki*, L Wehbe*

NeurIPS 2023

Move to See Better: Towards Self-Improving Embodied Object Detection

GH Sarch*, Z Fang*, A Jain*, AW Harley, K Fragkiadaki

BMVC 2021
